Simple Autoencoder

If you look long enough into the autoencoder, it looks back at you.

The autoencoder is a fun deep learning model to look into. Its goal is simple: given an input image, we would like the model to produce the same image as its output.

It’s sort of an identity function for deep learning models, but it is composed of two parts: an encoder and a decoder. The encoder translates the image into a latent space representation, and the decoder translates that back into a regular image that we can view.

We are going to make a simple autoencoder with Clojure MXNet for handwritten digits using the MNIST dataset.

The Dataset

We first load up the training data into an iterator that will allow us to cycle through all the images.
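
As a rough sketch, loading MNIST into a Clojure MXNet iterator could look like the following. The `data-dir` path is hypothetical and assumes the MNIST idx files have already been downloaded. The batch size of 100 matches the batches used later in this post.

```clojure
(ns autoencoder.data-sketch
  (:require [org.apache.clojure-mxnet.io :as mx-io]))

;; Assumption: hypothetical directory that already holds the MNIST idx files.
(def data-dir "data/mnist/")
(def batch-size 100)

(def train-data
  (mx-io/mnist-iter {:image (str data-dir "train-images-idx3-ubyte")
                     :label (str data-dir "train-labels-idx1-ubyte")
                     :input-shape [784] ; 28 * 28 pixels, flattened
                     :flat true
                     :batch-size batch-size
                     :shuffle true}))
```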

Notice that the input shape is 784. We are purposely flattening our 28x28 image of a digit into a one-dimensional array of 784 values. The reason is so that we can use a simpler model for the autoencoder.
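
To make the flattening concrete, here is a small sketch in plain Clojure with no MXNet involved. The helper names `flatten-image` and `unflatten-image` are made up for illustration. The stand-in "image" just stores each pixel's flat index, so the round trip is easy to check.

```clojure
;; A stand-in for an image: 28 rows of 28 values.
;; Each value is simply its own position in the flat array.
(def image-28x28
  (mapv vec (partition 28 (range 784))))

(defn flatten-image
  "Turn a vector of rows into one flat vector, row by row."
  [rows]
  (vec (apply concat rows)))

(defn unflatten-image
  "Split a flat vector back into rows of `width` pixels."
  [flat width]
  (mapv vec (partition width flat)))

(def flat-image (flatten-image image-28x28))

(count flat-image)                              ; 784
(= image-28x28 (unflatten-image flat-image 28)) ; true
```

The same idea, applied in reverse, is what lets us turn a flat array back into a viewable 28x28 picture.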

We also load up the corresponding test data.

When we are working with deep learning models we keep the training and the test data separate. When we train the model, we won’t use the test data. That way we can evaluate it later on the unseen test data.

The Model

Now we need to define the layers of the model. We know we are going to have an input and an output. The input will be the array that represents the image of the digit, and the output will also be an array, which is a reconstruction of that image.
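
A minimal sketch of such a model with the Clojure MXNet symbol API might look like this. Only the layer sizes (784, 100, 50, 50, 100, 784) come from this post. The variable names `"input"` and `"input_"`, the layer names, the sigmoid activations, and the linear regression output are assumptions chosen for illustration.

```clojure
(ns autoencoder.model-sketch
  (:require [org.apache.clojure-mxnet.symbol :as sym]))

;; Assumed names: "input" is the image, "input_" is the target
;; that the reconstruction is compared against.
(def input  (sym/variable "input"))
(def output (sym/variable "input_"))

(defn get-symbol []
  (as-> input data
    ;; encoder: 784 -> 100 -> 50
    (sym/fully-connected "encode1" {:data data :num-hidden 100})
    (sym/activation "sigmoid1" {:data data :act-type "sigmoid"})
    (sym/fully-connected "encode2" {:data data :num-hidden 50})
    (sym/activation "sigmoid2" {:data data :act-type "sigmoid"})
    ;; decoder: 50 -> 50 -> 100
    (sym/fully-connected "decode1" {:data data :num-hidden 50})
    (sym/activation "sigmoid3" {:data data :act-type "sigmoid"})
    (sym/fully-connected "decode2" {:data data :num-hidden 100})
    (sym/activation "sigmoid4" {:data data :act-type "sigmoid"})
    ;; output: 100 -> 784, squashed into the 0..1 range
    (sym/fully-connected "result" {:data data :num-hidden 784})
    (sym/activation "sigmoid5" {:data data :act-type "sigmoid"})
    ;; regression loss between the reconstruction and the target
    (sym/linear-regression-output {:data data :label output})))
```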

From the model above we can see the input (image) being passed through the simple layers of the encoder down to its latent representation, and then expanded back up by the decoder into an output (image). It goes through the pleasingly symmetric transformation of:

784 (image) -> 100 -> 50 -> 50 -> 100 -> 784 (output)
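
To get a feel for how small this model is, we can count its trainable parameters in plain Clojure. This is a back-of-the-envelope sketch that assumes every step in the chain is a fully connected layer with a bias term.

```clojure
(def layer-sizes [784 100 50 50 100 784])

(defn dense-param-count
  "Weights plus biases for one fully connected layer."
  [[n-in n-out]]
  (+ (* n-in n-out) n-out))

;; Pair up neighbouring sizes: [784 100], [100 50], ...
(def per-layer
  (mapv dense-param-count (partition 2 1 layer-sizes)))

per-layer            ; [78500 5050 2550 5100 79184]
(reduce + per-layer) ; 170384
```

Almost all of the parameters sit in the first and last layers, which touch the full 784-pixel image.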

We can now construct the full model with the module api from clojure-mxnet.
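
One way this could be wired up is sketched below. The function `build-model` is a hypothetical helper. It takes the network symbol and the training iterator as arguments so that the example stands on its own. The names `"input"` and `"input_"` are assumed to match the variables used in the network, and the uniform initializer and Adam optimizer are illustrative choices rather than requirements.

```clojure
(ns autoencoder.module-sketch
  (:require [org.apache.clojure-mxnet.initializer :as initializer]
            [org.apache.clojure-mxnet.io :as mx-io]
            [org.apache.clojure-mxnet.module :as m]
            [org.apache.clojure-mxnet.optimizer :as optimizer]))

(defn build-model
  "Bind `net` so that the image data is used as both data and label."
  [net train-data]
  (let [;; description (name, shape, ...) of the image data in the iterator
        data-desc (first (mx-io/provide-data-desc train-data))]
    (-> (m/module net {:data-names ["input"] :label-names ["input_"]})
        ;; the same image description is used for the data and the label
        (m/bind {:data-shapes  [(assoc data-desc :name "input")]
                 :label-shapes [(assoc data-desc :name "input_")]})
        (m/init-params {:initializer (initializer/uniform 1)})
        (m/init-optimizer {:optimizer (optimizer/adam {:learning-rate 0.001})}))))
```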

Notice that when we are binding the data-shapes and label-shapes we are using only the data from our handwritten digit dataset (the images) and not the labels. This ensures that as the model trains, it seeks to recreate the input image as the output image.

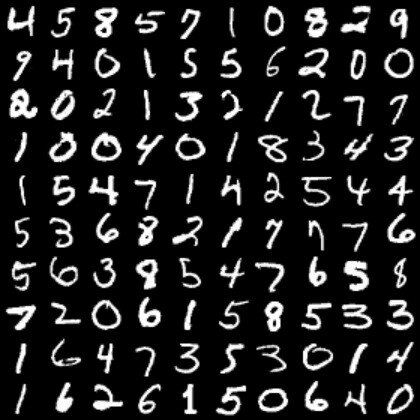

Before Training

Before we start our training, let’s get a baseline of what the original images look like and what the output of the untrained model is.

To look at the original images we can take the first training batch of 100 images and visualize them. Since we are initially using the flattened [784] image representation, we need to reshape it back to the 28x28 image that we can recognize.

We can do the same visualization for the output of the untrained model: take a batch of test images, pass them to predict-batch with our model, and view the result.

They are not anything close to recognizable as numbers.

Training

The next step is to train the model on the data. We set up a training function to step through all the batches of data.
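
A training function along these lines could be sketched as follows. It is written to take the model and the training iterator as arguments so that it is self-contained. The exact shape of the function is an assumption, not a definitive implementation.

```clojure
(ns autoencoder.train-sketch
  (:require [org.apache.clojure-mxnet.eval-metric :as eval-metric]
            [org.apache.clojure-mxnet.io :as mx-io]
            [org.apache.clojure-mxnet.module :as m]))

(defn train
  "Run `num-epochs` passes over `train-data`, printing the mse after each."
  [model train-data num-epochs]
  (let [metric (eval-metric/mse)]
    (doseq [epoch (range num-epochs)]
      (mx-io/do-batches
       train-data
       (fn [batch]
         (let [images (mx-io/batch-data batch)]
           (-> model
               ;; the images serve as both the data and the label
               (m/forward {:data images :label images})
               (m/update-metric metric images)
               (m/backward)
               (m/update)))))
      (println "epoch" epoch "mse:" (eval-metric/get-and-reset metric)))))
```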

For each batch of 100 images it is doing the following:

- Run the forward pass of the model with both the data and label being the image

- Update the accuracy of the model with the mse (mean squared error) metric

- Do the backward computation

- Update the model according to the optimizer and the forward/backward computation.
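
The mean squared error itself is easy to picture in plain Clojure. This sketch works on ordinary sequences of numbers rather than MXNet arrays, and the `mse` function here is our own illustration, not the library's metric.

```clojure
(defn mse
  "Mean squared error between two equal-length sequences of numbers."
  [xs ys]
  (/ (reduce + (map (fn [x y]
                      (let [d (- x y)]
                        (* d d)))
                    xs ys))
     (double (count xs))))

;; A perfect reconstruction has no error.
(mse [0.0 0.5 1.0] [0.0 0.5 1.0])         ; 0.0

;; Two of four pixels are completely wrong.
(mse [0.0 0.0 1.0 1.0] [0.0 1.0 1.0 0.0]) ; 0.5
```

The closer the reconstructed pixels are to the original ones, the closer this number gets to zero, which is what training pushes toward.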

Let’s train it for 3 epochs.

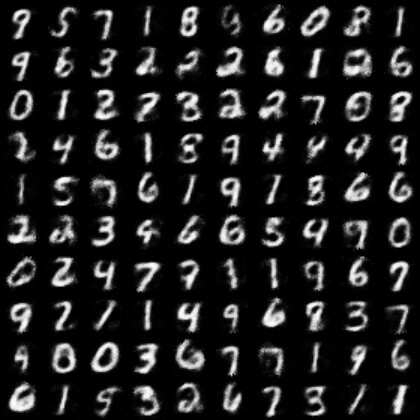

After Training

We can check the test images again and see if they look better.

Much improved! They definitely look like numbers.

Wrap up

We’ve made a simple autoencoder that can take images of digits and compress them down to a latent space representation that can later be decoded back into the same image.

If you want to check out the full code for this example, you can find it here.

Stay tuned. We’ll take this example and build on it in future posts.